Host challenge

EvalAI supports hosting challenges with different configurations. Challenge organizers can customize most aspects of a challenge, including but not limited to:

- Evaluation metrics

- Language/Framework to implement the metric

- Number of phases and data-splits

- Daily / monthly / overall submission limit

- Number of workers evaluating submissions

- Evaluation on remote machines

- Use your own AWS credentials to host a code-upload based challenge

- Show / hide error bars on leaderboard

- Public / private leaderboards

- Allow / block participation by specific email addresses in the challenge or a phase

- Choose which fields to export while downloading challenge submissions

We have hosted challenges from different domains such as:

- Machine learning (2019 SIOP Machine Learning Competition)

- Deep learning (Visual Dialog Challenge 2019)

- Computer vision (Vision and Language Navigation)

- Natural language processing (VQA Challenge 2019)

- Healthcare (fastMRI Image Reconstruction)

- Self-driving cars (CARLA Autonomous Driving Challenge)

We divide challenges into two categories:

Prediction upload based challenges: Participants upload predictions corresponding to the ground truth labels in the form of a file (in any format: json, npy, csv, txt, etc.). Some of the popular prediction upload based challenges that we have hosted are shown below:

If you are interested in hosting prediction upload based challenges, then click here.

Code-upload based challenges: In these challenges, participants upload their training code in the form of Docker images using EvalAI-CLI.

We support two types of code-upload based challenges:

- Code-Upload Based Challenge (without Static Dataset): These are usually reinforcement learning challenges in which participants upload a trained agent in the form of a Docker image, and the environment is also packaged as a Docker image.

- Static Code-Upload Based Challenge: In these challenges, participants upload their models, which are run on a static dataset provided by the host to produce predictions that are then evaluated. This kind of challenge is especially useful when there are data privacy concerns.

Some of the popular code-upload based challenges that we have hosted are shown below:

If you are interested in hosting code-upload based challenges, then click here. If you are interested in hosting static code-upload based challenges, then click here.

A good reference would be the Habitat Re-arrangement Challenge 2022.

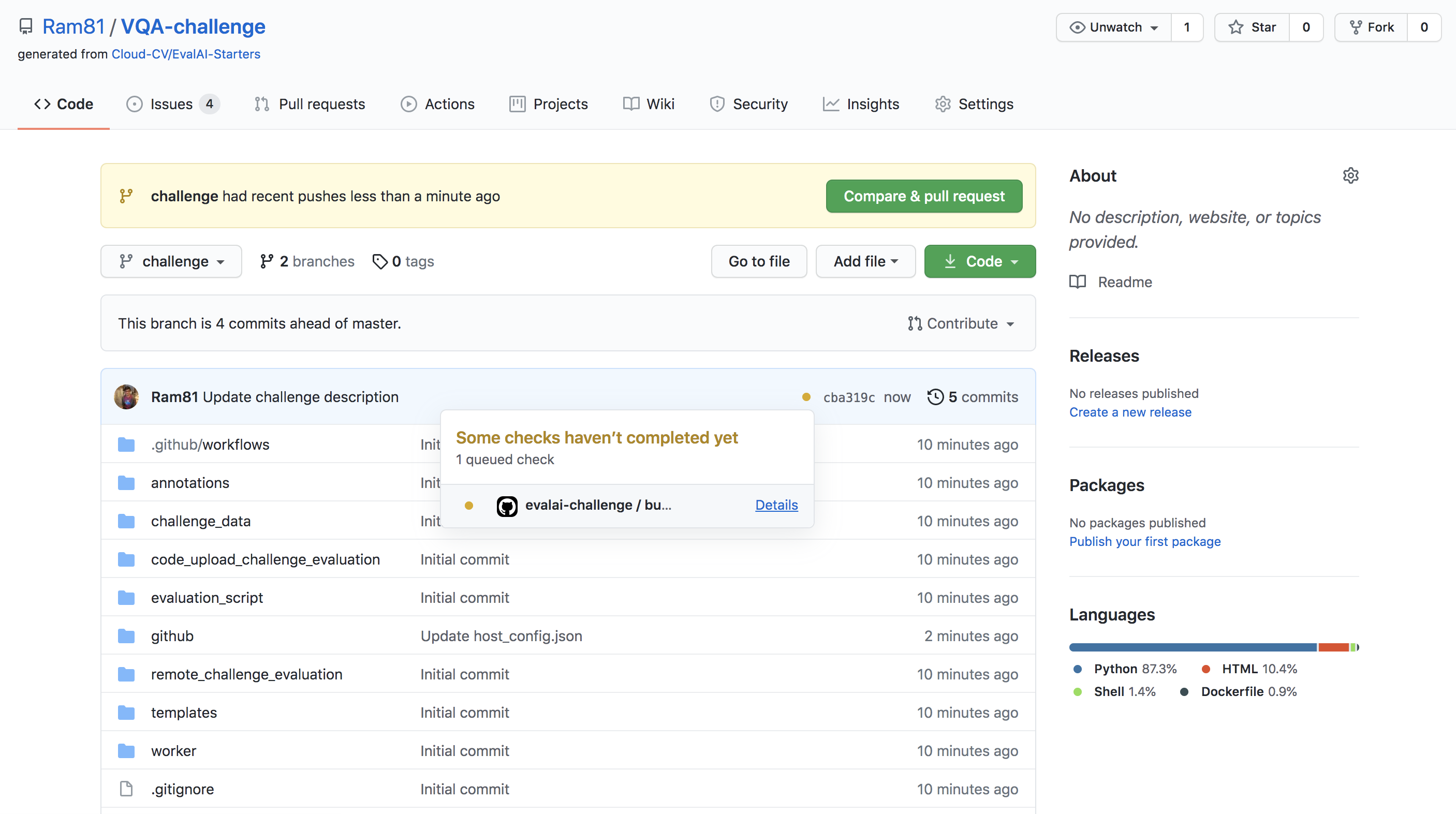

Host challenge using GitHub

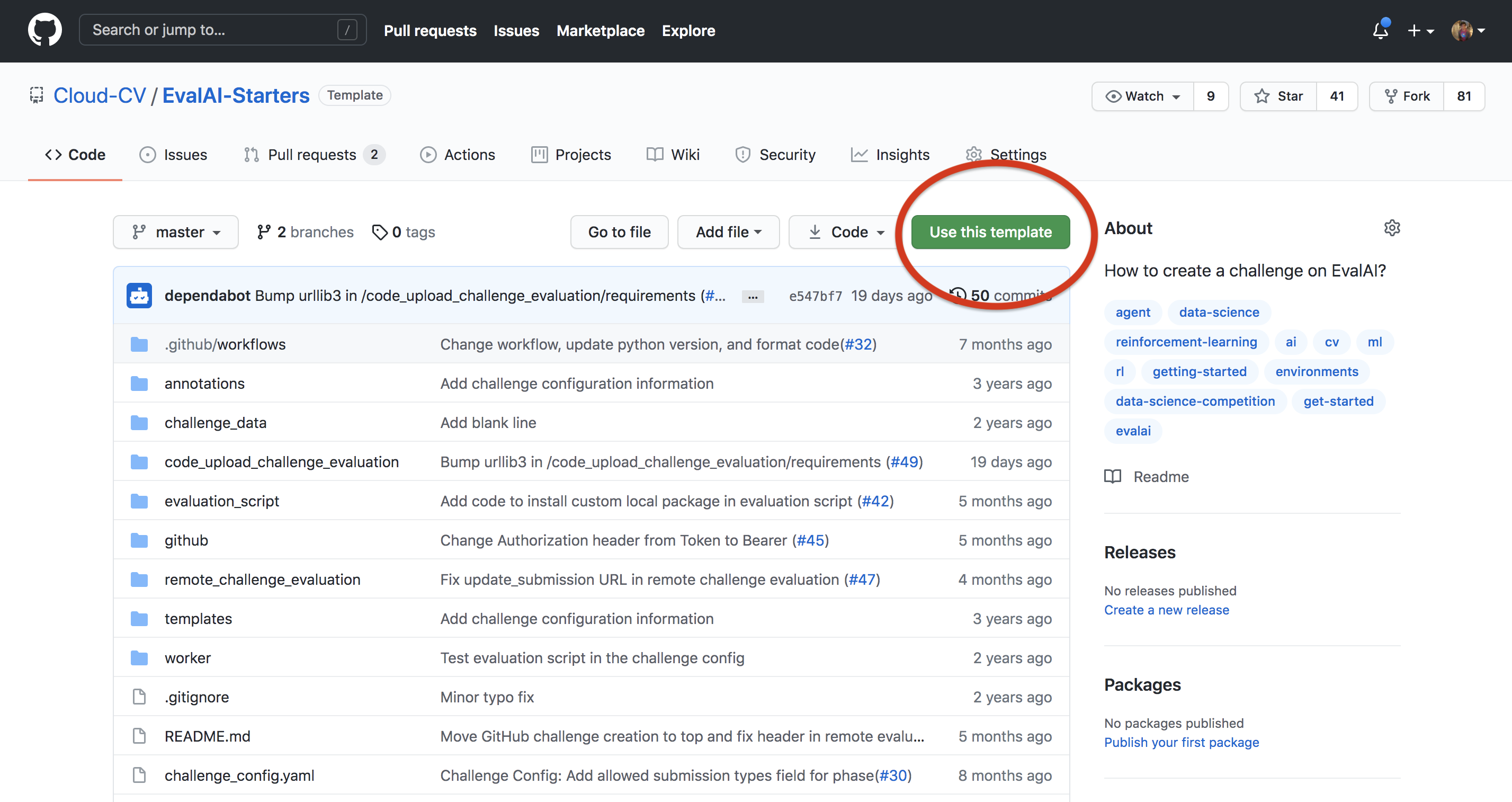

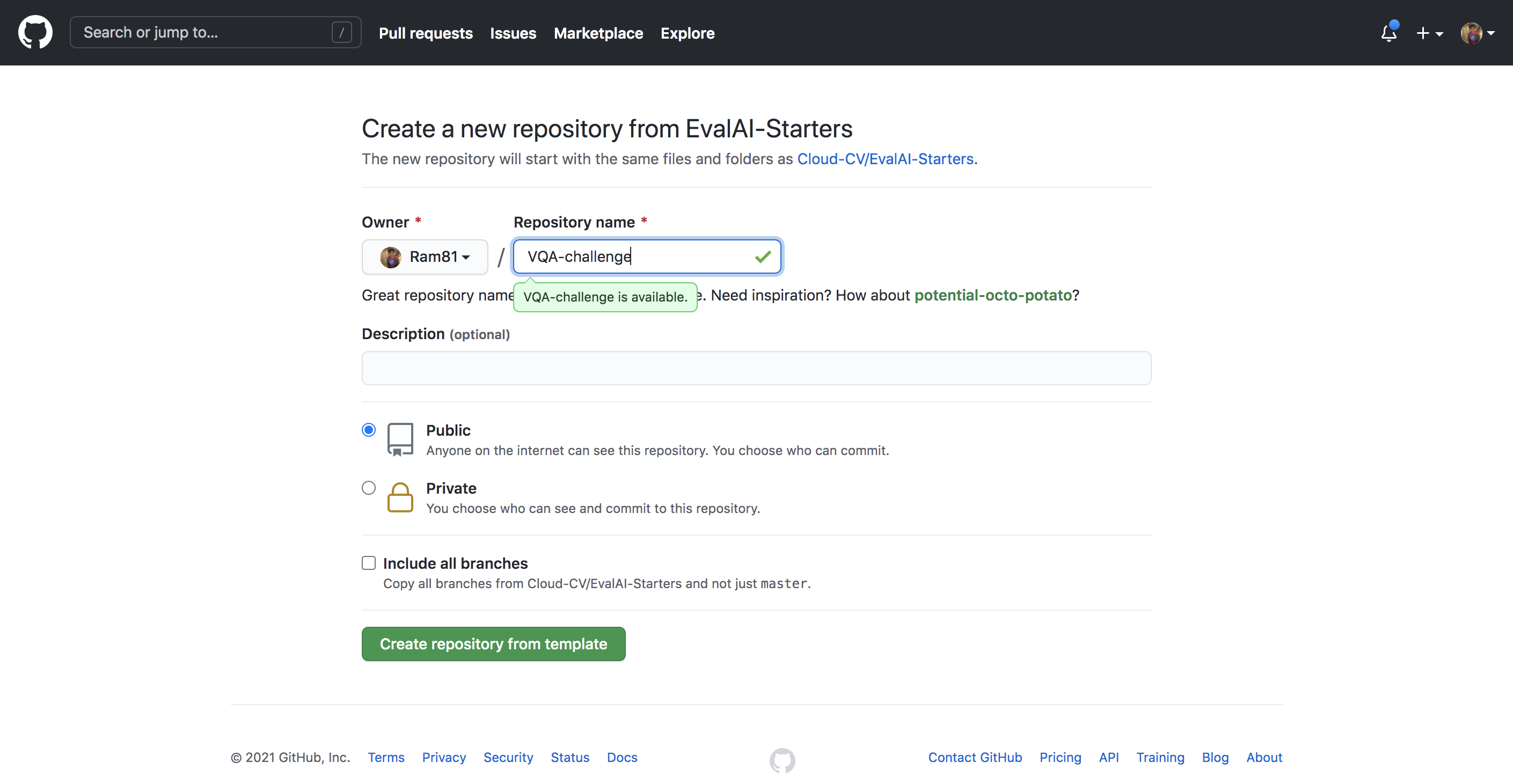

Step 1: Use template

Use the EvalAI-Starters template. See this guide on how to use a repository as a template.

Step 2: Generate GitHub token

Generate your GitHub personal access token and copy it to your clipboard.

Add the GitHub personal access token to your repository's secrets with the name AUTH_TOKEN.

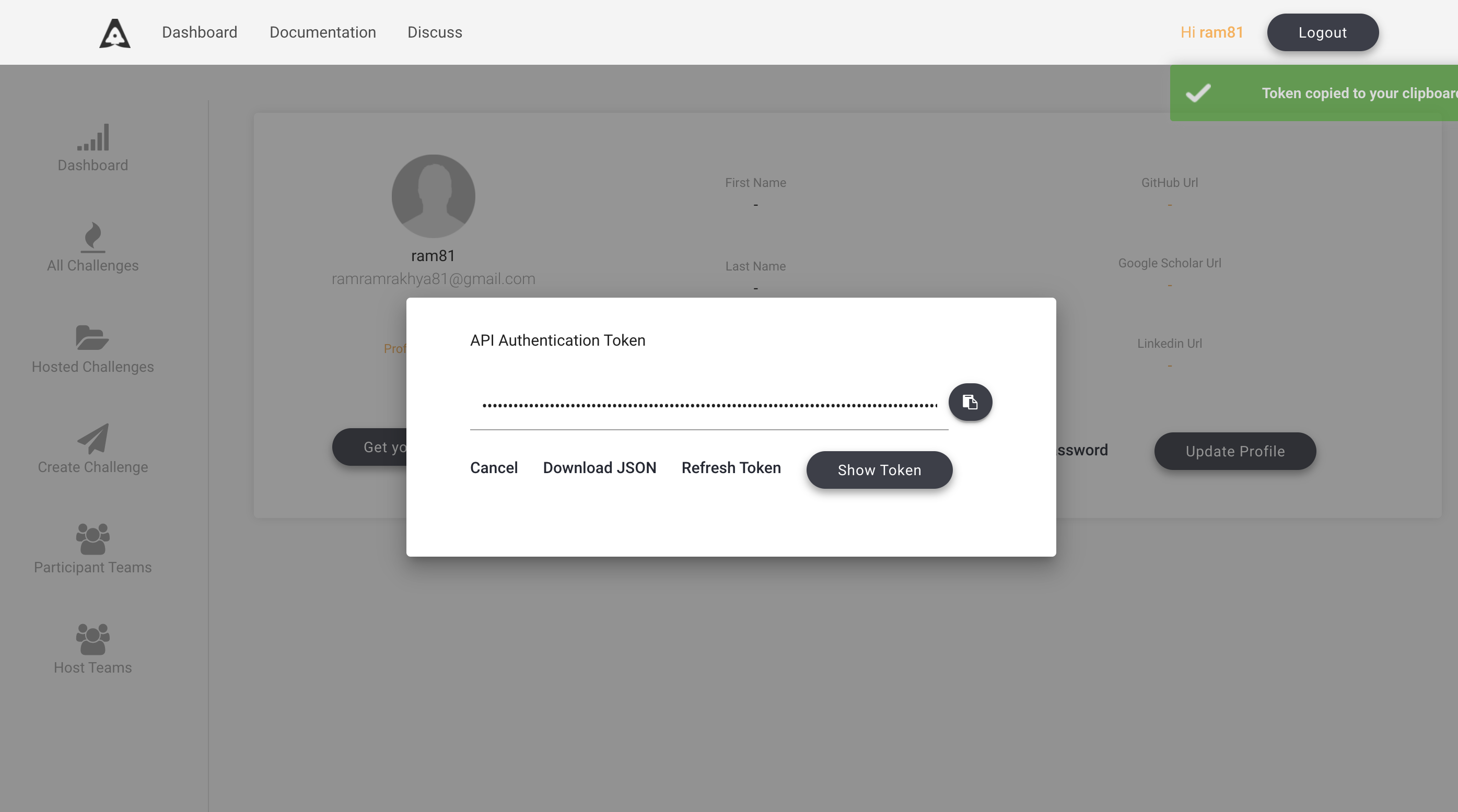

Step 3: Set up host configuration

Now, go to EvalAI to fetch the following details:

- evalai_user_auth_token - Go to your profile page after logging in and click on Get your Auth Token to copy your auth token.

- host_team_pk - Go to the host team page and copy the ID of the team you want to use for challenge creation.

- evalai_host_url - Use https://eval.ai for the production server and https://staging.eval.ai for the staging server.

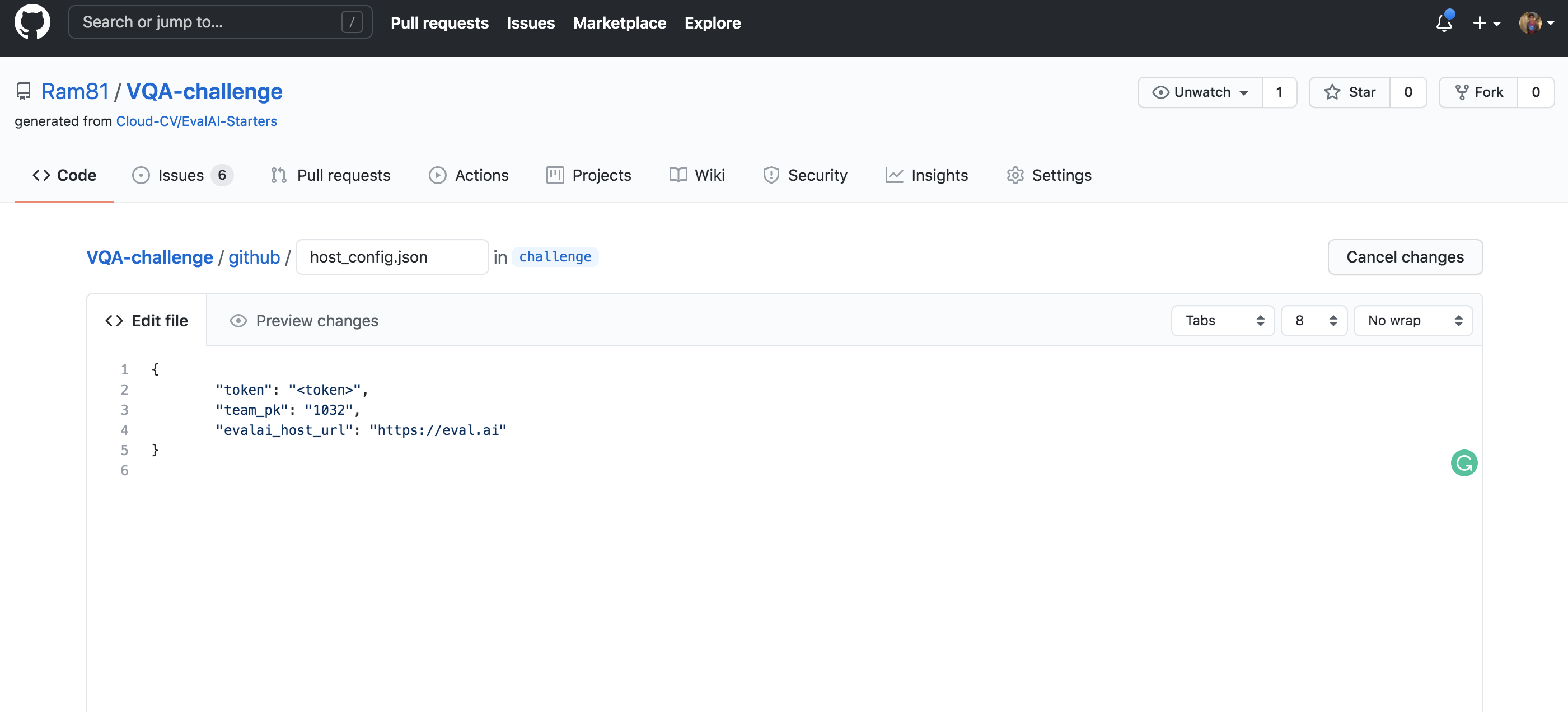

Step 4: Set up automated update push

Create a branch named challenge from the master branch of your repository.

Note: Only changes in the challenge branch will be synchronized with the challenge on EvalAI.

Add evalai_user_auth_token, host_team_pk, and evalai_host_url to github/host_config.json.
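If you prefer to generate github/host_config.json instead of editing it by hand, the sketch below writes it from Python. It assumes the file uses the keys token, team_pk, and evalai_host_url; check the file in your copy of EvalAI-Starters and adjust the key names if they differ. The helper write_host_config is hypothetical and not part of EvalAI.

```python
import json
from pathlib import Path

# Assumed key names; verify them against github/host_config.json in your repository.
ALLOWED_HOSTS = {"https://eval.ai", "https://staging.eval.ai"}


def write_host_config(repo_root, auth_token, host_team_pk, host_url="https://eval.ai"):
    """Write github/host_config.json (hypothetical helper) and return the config dict."""
    if host_url not in ALLOWED_HOSTS:
        raise ValueError(f"unexpected EvalAI host URL: {host_url}")
    config = {
        "token": auth_token,           # evalai_user_auth_token from your profile page
        "team_pk": str(host_team_pk),  # ID of the host team (stored as a string here)
        "evalai_host_url": host_url,   # production or staging server
    }
    path = Path(repo_root) / "github" / "host_config.json"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=4))
    return config
```

For example, write_host_config(".", "<your-auth-token>", 123) run from the repository root would write the file for host team 123 on the production server.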

Step 5: Update challenge details

Read the EvalAI challenge creation documentation to decide how you want to structure your challenge. Once you are ready, start making changes to the YAML file, HTML templates, and evaluation script according to your needs.

Step 6: Push changes to the challenge¶

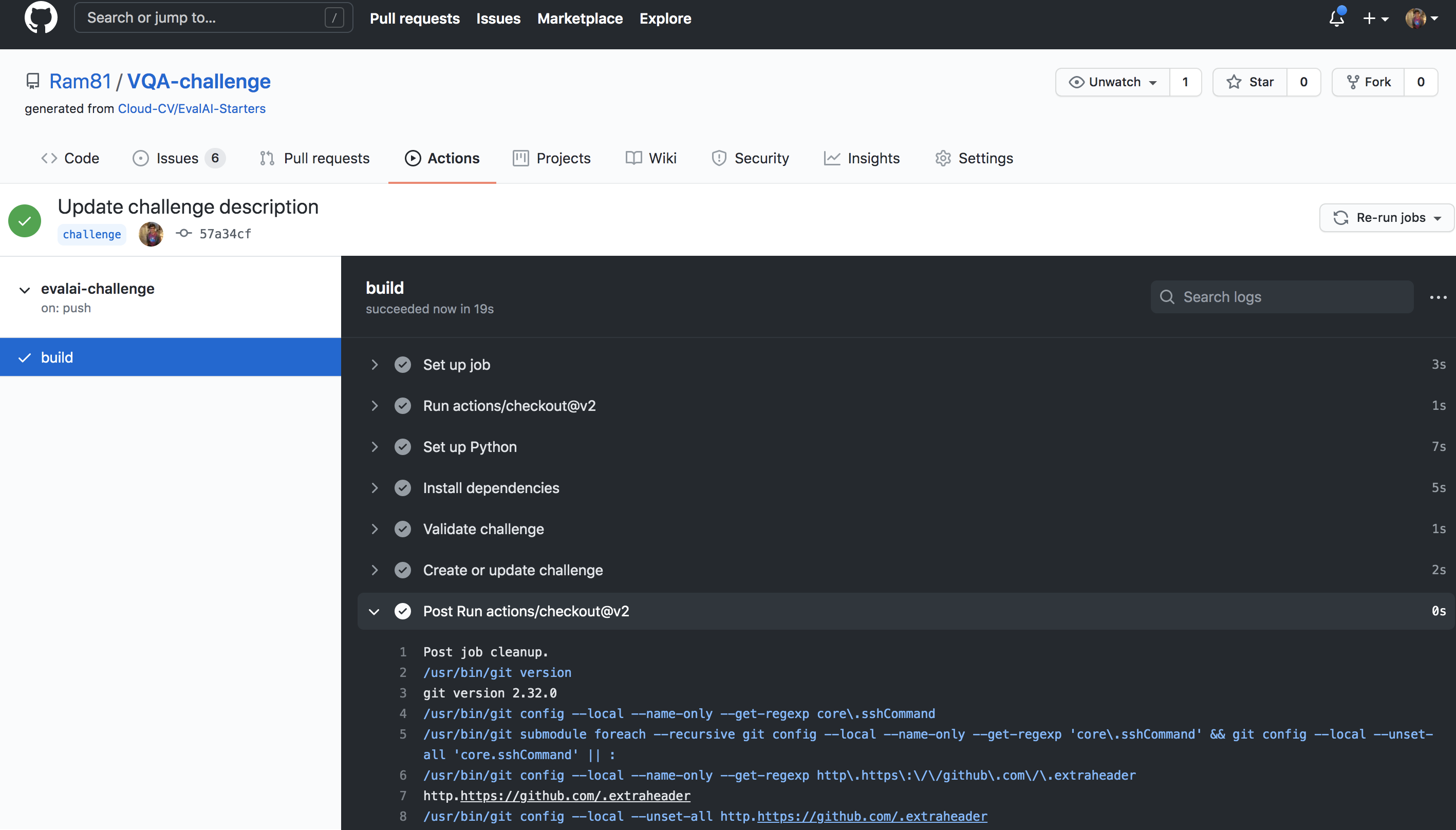

Commit the changes and push the challenge branch in the repository and wait for the build to complete. View the logs of your build.

If challenge config contains errors then a issue will be opened automatically in the repository with the errors otherwise the challenge will be created on EvalAI.

Step 7: Verify challenge

Go to Hosted Challenges to view your challenge. The challenge will be publicly available once an EvalAI admin approves it.

To update the challenge on EvalAI, make changes in the repository, push them to the challenge branch, and wait for the build to complete.

Host prediction upload based challenge

Step 1: Set up challenge configuration

We have created a sample challenge configuration that we recommend you use to get started. Use the EvalAI-Starters template to start. See this guide on how to use a repository as a template.

Step 2: Edit challenge configuration

Open challenge_config.yml from the repository that you cloned in Step 1. This file defines all the settings of your challenge, such as the start date, end date, number of phases, and submission limits.

Edit this file based on your requirement. For reference to the fields, refer to the challenge configuration reference section.
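Mistakes in dates and submission limits are easy to make in a long YAML file. The sketch below runs a few sanity checks on the parsed configuration (for example, the dict returned by yaml.safe_load). It assumes field names such as start_date, end_date, challenge_phases, max_submissions_per_day, max_submissions_per_month, and max_submissions, and the date format YYYY-MM-DD HH:MM:SS; confirm them against the challenge configuration reference. The function check_config is hypothetical, and the daily <= monthly <= overall rule is a heuristic, not an EvalAI requirement.

```python
from datetime import datetime

DATE_FORMAT = "%Y-%m-%d %H:%M:%S"  # assumed date format used in challenge_config.yml


def parse_date(value):
    # yaml.safe_load may already return a datetime for unquoted timestamps.
    return value if isinstance(value, datetime) else datetime.strptime(value, DATE_FORMAT)


def check_config(config):
    """Return a list of problems found in a parsed challenge config (hypothetical helper)."""
    problems = []
    start, end = parse_date(config["start_date"]), parse_date(config["end_date"])
    if start >= end:
        problems.append("challenge start_date must be before end_date")
    for phase in config.get("challenge_phases", []):
        name = phase.get("name", phase.get("id"))
        p_start, p_end = parse_date(phase["start_date"]), parse_date(phase["end_date"])
        if p_start >= p_end:
            problems.append(f"phase {name}: start_date must be before end_date")
        if p_start < start or p_end > end:
            problems.append(f"phase {name}: dates fall outside the challenge dates")
        # Heuristic: limits that are set should not shrink from daily to overall.
        limits = [
            phase[key]
            for key in ("max_submissions_per_day", "max_submissions_per_month", "max_submissions")
            if phase.get(key) is not None
        ]
        if limits != sorted(limits):
            problems.append(f"phase {name}: expected daily <= monthly <= overall submission limit")
    return problems
```

An empty list means none of these checks fired; it does not replace the validation EvalAI performs when the challenge is created.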

Step 3: Edit evaluation script

The next step is to edit the challenge evaluation script, which decides the metrics on which submissions are evaluated in each phase.

Please refer to the Writing Evaluation Script section to complete this step.
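As a rough illustration, the sketch below follows the shape of the sample evaluation script in EvalAI-Starters as we understand it: an evaluate(test_annotation_file, user_submission_file, phase_codename, **kwargs) function that returns a dict whose "result" entry lists metrics per dataset split. The file format (a JSON object mapping example IDs to labels), the split codename test_split, and the Accuracy metric are assumptions for this example; match them to your own challenge_config.yml.

```python
import json


def evaluate(test_annotation_file, user_submission_file, phase_codename, **kwargs):
    """Score a submission by accuracy (sketch).

    Assumes both files are JSON objects mapping example IDs to labels, and that
    the phase's leaderboard uses a split with codename "test_split" (hypothetical).
    phase_codename is unused here but lets you compute different metrics per phase.
    """
    with open(test_annotation_file) as f:
        ground_truth = json.load(f)
    with open(user_submission_file) as f:
        predictions = json.load(f)

    # Missing predictions count as wrong, so partial submissions are not rewarded.
    correct = sum(1 for key, label in ground_truth.items() if predictions.get(key) == label)
    accuracy = 100.0 * correct / len(ground_truth) if ground_truth else 0.0

    output = {"result": [{"test_split": {"Accuracy": accuracy}}]}
    # Metrics shown to the participant for this submission.
    output["submission_result"] = output["result"][0]["test_split"]
    return output
```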

Step 4: Edit challenge HTML templates

Almost there. You just need to update the HTML templates in the templates/ directory of the bundle that you cloned.

EvalAI supports all kinds of HTML tags, which means you can add images, videos, tables, etc. You can also add inline CSS to customize the styling of your challenge details.

Congratulations! You have submitted your challenge configuration for review, and the EvalAI team has been notified. The EvalAI team will review and approve the challenge.

If you have issues creating a challenge on EvalAI, please feel free to contact us at team@cloudcv.org or create an issue on our GitHub issues page.

Host code-upload based challenge

Step 1: Set up challenge repository

The steps to create a code-upload based challenge are similar to those for creating a prediction upload based challenge.

We have created a sample challenge repository that we recommend you use to get started. Use the EvalAI-Starters template to start. See this guide on how to use a repository as a template.

Step 2: Edit challenge configuration

Open challenge_config.yml from the repository that you cloned in Step 1. This file defines all the settings of your challenge, such as the start date, end date, number of phases, and submission limits. Edit this file based on your requirements.

Please ensure the following fields are set to the following values for code-upload based challenges:

```yaml
remote_evaluation: True
is_docker_based: True
```

In order to perform evaluation, you might also need to create an EKS cluster on AWS, because both the agent and the environment run as Docker containers. See AWS Elastic Kubernetes Service to learn more about what EKS is and how it works.

If you are using your own AWS account, we need the following details to set up the EKS cluster for evaluation:

```yaml
aws_account_id: <AWS Account ID>
aws_access_key_id: <AWS Access Key ID>
aws_secret_access_key: <AWS Secret Access Key>
aws_region: <AWS Region>
```

Please email these details to us at team@cloudcv.org. The EvalAI team will set up the infrastructure in your AWS account. For reference to the other fields, refer to the challenge configuration reference section.

Step 3: Edit evaluation code

The next step is to write the code-upload challenge evaluation, which decides the metrics on which submissions are evaluated in each phase.

For code-upload challenges, the environment image is expected to be created by the host and the agent image is to be pushed by the participants.

Please refer to the Writing Code-Upload Challenge Evaluation section to complete this step.
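The exact protocol between the environment and agent containers is described in the Writing Code-Upload Challenge Evaluation section. Purely to illustrate the kind of metric the environment side computes, the sketch below runs a fixed number of episodes with an agent and reports the mean return. The reset/step/act interface and the toy CountdownEnv are hypothetical stand-ins, not EvalAI APIs.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class CountdownEnv:
    """Toy environment (hypothetical): reward 1 for action 1, episode ends after `horizon` steps."""
    horizon: int = 5
    t: int = 0

    def reset(self):
        self.t = 0
        return self.t  # initial observation

    def step(self, action):
        self.t += 1
        reward = 1.0 if action == 1 else 0.0
        done = self.t >= self.horizon
        return self.t, reward, done


def evaluate_agent(env, act, num_episodes=10):
    """Run `num_episodes` episodes with policy `act(observation) -> action`; return the mean return."""
    returns = []
    for _ in range(num_episodes):
        obs, done, total = env.reset(), False, 0.0
        while not done:
            obs, reward, done = env.step(act(obs))
            total += reward
        returns.append(total)
    return mean(returns)
```

For example, evaluate_agent(CountdownEnv(), lambda obs: 1) scores a policy that always picks action 1; in a real challenge, act would forward the observation to the participant's agent container.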

Step 4: Edit challenge HTML templates

Almost there. You just need to update the HTML templates in the templates/ directory of the bundle that you cloned.

EvalAI supports all kinds of HTML tags, which means you can add images, videos, tables, etc. You can also add inline CSS to customize the styling of your challenge details.

Please include a detailed submission_guidelines.html as it is usually not as straightforward for the participants to upload submissions for code-upload challenges.

The participants are expected to submit links to their agent docker images using evalai-cli. Here is an example of a command:

```shell
evalai push <image>:<tag> --phase <phase_name>
```

Please refer to the documentation for more details on this.

A good example of submission guidelines for code-upload challenges is present here.

Congratulations! You have submitted your challenge configuration for review, and the EvalAI team has been notified. The EvalAI team will review and approve the challenge.

Host static code-upload based challenge

Step 1: Set up challenge repository

The steps to create a static code-upload based challenge are very similar to those for prediction upload based and code-upload based challenges.

We have created a sample challenge repository that we recommend you use to get started. Use the EvalAI-Starters template to start. See this guide on how to use a repository as a template.

Step 2: Edit challenge configuration

Open challenge_config.yml from the repository that you cloned in Step 1. This file defines all the settings of your challenge, such as the start date, end date, number of phases, and submission limits. Edit this file based on your requirements.

Please ensure the following fields are set to the following values for static code-upload based challenges:

```yaml
remote_evaluation: True
is_docker_based: True
is_static_dataset_code_upload: True
```

In order to perform evaluation, you might also need to create an EKS cluster on AWS, because Docker containers are used for both the evaluation environment and the participant's model. See AWS Elastic Kubernetes Service to learn more about what EKS is and how it works.

If you are using your own AWS account, we need the following details to set up the EKS cluster for evaluation:

```yaml
aws_account_id: <AWS Account ID>
aws_access_key_id: <AWS Access Key ID>
aws_secret_access_key: <AWS Secret Access Key>
aws_region: <AWS Region>
```

Please email these details to us at team@cloudcv.org. The EvalAI team will set up the infrastructure in your AWS account.

For reference to the other fields, refer to the challenge configuration reference section.

Step 3: Save the static dataset using EFS

Use AWS EFS to store the static dataset file(s) on which the evaluation is performed. By default, an EFS file system is created, its file system ID is stored in the efs_id field of the Challenge Evaluation Cluster, and the file system is then mounted on the instances inside the cluster.

Step 4: Create a sample submission Dockerfile

Create a Dockerfile that shows participants how to install their requirements and run the submission script that produces the predictions file. This Docker image is then run on the cluster to generate predictions.

A template Dockerfile is shown below:

```dockerfile
FROM nvidia/cuda:11.2.0-cudnn8-runtime-ubuntu20.04

# Install Python 3 and pip (not included in the base image)
RUN apt-get update && \
    DEBIAN_FRONTEND=noninteractive apt-get install -y python3 python3-pip

# ADDITIONAL PYTHON DEPENDENCIES
COPY requirements.txt ./
RUN pip3 install -r requirements.txt

WORKDIR /app

# COPY WHATEVER OTHER SCRIPTS YOU MAY NEED
COPY trained_model /trained_model
COPY submission.py ./

# SPECIFY THE ENTRYPOINT SCRIPT
CMD ["python3", "-u", "submission.py"]
```

This Docker image runs the submission.py script, which saves the predictions at the specified location.
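A minimal submission.py might look like the sketch below. The input directory, output path, CSV layout, and predict function are hypothetical placeholders (read here from the environment variables INPUT_DIR and OUTPUT_FILE); the real locations and formats are whatever you document for participants, and the trained model under /trained_model would be loaded where indicated.

```python
import csv
import os
from pathlib import Path

# Hypothetical locations; document the real ones for your participants.
INPUT_DIR = Path(os.environ.get("INPUT_DIR", "/dataset"))
OUTPUT_FILE = Path(os.environ.get("OUTPUT_FILE", "predictions.csv"))


def predict(text):
    """Placeholder model: replace with inference using the weights in /trained_model."""
    return len(text.split())


def main(input_dir=INPUT_DIR, output_file=OUTPUT_FILE):
    input_dir, output_file = Path(input_dir), Path(output_file)
    output_file.parent.mkdir(parents=True, exist_ok=True)
    # If the dataset directory is not mounted, write a header-only file.
    inputs = sorted(input_dir.glob("*.txt")) if input_dir.is_dir() else []
    with open(output_file, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["id", "prediction"])
        # One prediction per input file, keyed by the file name without extension.
        for path in inputs:
            writer.writerow([path.stem, predict(path.read_text())])
    return output_file


if __name__ == "__main__":
    main()
```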

Step 5: Edit evaluation script

The next step is to write the evaluation script that computes the metrics for each submission.

Please refer to the Writing Static Code-Upload Challenge Evaluation section to complete this step.

Step 6: Prepare detailed documentation

Prepare detailed documentation covering the following:

- Input/Output Format: Details about the model input format and the expected model output format, with examples.

- Expected Input/Output File Names: Where the dataset is stored in the Docker container, where the output file should be saved, and the output file name.

- Docker commands/script: The Docker commands or script to build the image from the Dockerfile and run the container.

- Submission Command: The evalai-cli command to push the Docker image.

- Any other installation, processing, or training tips required for the task.

We recommend looking at the My Seizure Gauge Forecasting Challenge 2022 as an example; it documents every step in detail and includes tips for each stage of challenge participation.

Step 7: Edit challenge HTML templates

Almost there. You just need to update the HTML templates in the templates/ directory of the bundle that you cloned.

EvalAI supports all kinds of HTML tags, which means you can add images, videos, tables, etc. You can also add inline CSS to customize the styling of your challenge details.

Please include a detailed submission_guidelines.html as it is usually not as straightforward for the participants to upload submissions for static code-upload challenges.

The participants are expected to submit links to their model docker images using evalai-cli. Here is an example of a command:

```shell
evalai push <image>:<tag> --phase <phase_name>
```

Please refer to the documentation for more details on this.

A good example of submission guidelines is present here.

Congratulations! You have submitted your challenge configuration for review, and the EvalAI team has been notified. The EvalAI team will review and approve the challenge.

Host a remote evaluation challenge

Step 1: Set up the challenge

Follow the Host challenge using GitHub section to set up a challenge on EvalAI.

Step 2: Edit challenge configuration

Set the remote_evaluation parameter to True in challenge_config.yaml. This file defines all the settings of your challenge, such as the start date, end date, number of phases, and submission limits.

Edit this file based on your requirement. For reference to the fields, refer to the challenge configuration reference section.

Please ensure the following fields are set to the following values:

```yaml
remote_evaluation: True
```

Refer to the following documentation for details on challenge configuration.

Step 3: Edit remote evaluation script

The next step is to edit the challenge evaluation script, which decides the metrics on which submissions are evaluated in each phase. Please refer to the Writing Remote Evaluation Script section to complete this step.
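As we understand it, the worker in EvalAI-Starters/remote_challenge_evaluation repeatedly fetches pending submissions, evaluates them, and reports the results back to EvalAI. The sketch below shows only that control flow. fetch_submission, evaluate, and report are hypothetical callables you would wire to the real EvalAI interface used by the worker, and the status strings are placeholders; none of these are EvalAI function names.

```python
import time


def run_worker(fetch_submission, evaluate, report, poll_interval=60.0, max_iterations=None):
    """Poll for submissions and evaluate them (hypothetical control-flow sketch).

    fetch_submission() -> submission dict, or None when nothing is queued
    evaluate(submission) -> dict of metrics
    report(submission, status, result) -> None
    """
    iterations = 0
    while max_iterations is None or iterations < max_iterations:
        iterations += 1
        submission = fetch_submission()
        if submission is None:
            time.sleep(poll_interval)  # nothing queued; wait before polling again
            continue
        try:
            result = evaluate(submission)
            report(submission, "FINISHED", result)
        except Exception as exc:  # report the failure instead of crashing the worker
            report(submission, "FAILED", {"error": str(exc)})
```

Catching exceptions per submission keeps one malformed submission from stopping evaluation of the rest of the queue.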

Step 4: Set up remote evaluation worker

Create a conda environment to run the evaluation worker. Refer to conda's create environment section to set up a virtual environment.

Install the worker requirements from the EvalAI-Starters/remote_challenge_evaluation directory present here:

```shell
cd EvalAI-Starters/
pip install -r remote_challenge_evaluation/requirements.txt
```

Start evaluation worker:

```shell
cd EvalAI-Starters/remote_challenge_evaluation
python main.py
```

If you have issues creating a challenge on EvalAI, please feel free to contact us at team@cloudcv.org or create an issue on our GitHub issues page.